Recently there has been a lot of talk about self-driving cars and the influence that this new technology will have on society. Although Google’s goal was to create a driverless car that would solve all motoring problems, there are a few moral issues that may end up causing problems of their own. This, of course, is all part of the research, but it raises the question, “Will a computer make a better driver than a human being?”

Google claims that in the six years the project has been running, its fleet of just over 20 self-driving cars has been involved in 11 accidents – all of which were caused by other drivers. While the statement from Medium.com, “If you spend enough time on the road, accidents will happen whether you’re in a car or a self-driving car”, is true, 11 accidents in six years seems somewhat excessive, even when spread across 20 cars.

Research shows that the average driver is expected to be involved in a collision once every 17.9 years, a figure that differs noticeably from the collision record of the driverless cars. Is this new car created by Google really blameless in these accidents?
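To make the comparison concrete, here is a rough back-of-the-envelope sketch in Python using only the figures quoted above. It rests on some loud assumptions: the fleet is rounded to exactly 20 cars, every car is assumed to have been on the road for all six years, and mileage is ignored entirely (test cars may well cover far more distance per year than a typical driver). The function name `years_per_accident` is made up for this illustration.

```python
def years_per_accident(accidents: int, fleet_size: int, years: float) -> float:
    """Average number of car-years between accidents for a fleet."""
    car_years = fleet_size * years  # total time on the road across the fleet
    return car_years / accidents


# Figures quoted in the article (fleet rounded to 20 cars: an assumption)
fleet_interval = years_per_accident(accidents=11, fleet_size=20, years=6)

# Quoted average for a human driver: one collision every 17.9 years
human_interval = 17.9

# How many times more often the fleet is involved in an accident, per car
ratio = human_interval / fleet_interval

print(f"Self-driving fleet: one accident every {fleet_interval:.1f} car-years")
print(f"Average driver: one collision every {human_interval} years")
print(f"Ratio: {ratio:.2f}")
```

On these assumptions, each car is involved in an accident roughly once every 10.9 years, about 1.6 times as often as the average driver. That is only a crude comparison of time on the road, not of miles driven or of who was at fault.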

One of the major questions regarding this new technology is how insurance companies will decide who is at fault when collisions occur, and who is to blame if the accident was in fact caused by a self-driving car.

Job roles

The Society of Motor Manufacturers and Traders has published statistics that say the number of people employed in the motor business, currently 731,000 in the UK, will increase by almost 50% once driverless cars are released for public use. But where have these figures come from? There have been no statistics to show who, or how many people, would even be interested in using self-driving cars.

An issue that has arisen numerous times before, and will continue to haunt us as technology advances, is whether or not we should be using machines to take over jobs that humans can do. In Britain alone, an estimated one million full- or part-time workers use cars, taxis, coaches and lorries as part of their job. The shameful fact is that most, if not all, of these hard-working citizens stand to lose their jobs as soon as autonomous cars are introduced in this country.

With no statistics or research on how popular or useful these cars will be, concern has arisen about the loss of job opportunities for taxi drivers and chauffeurs across the world, and whether this loss is justified.

Laws

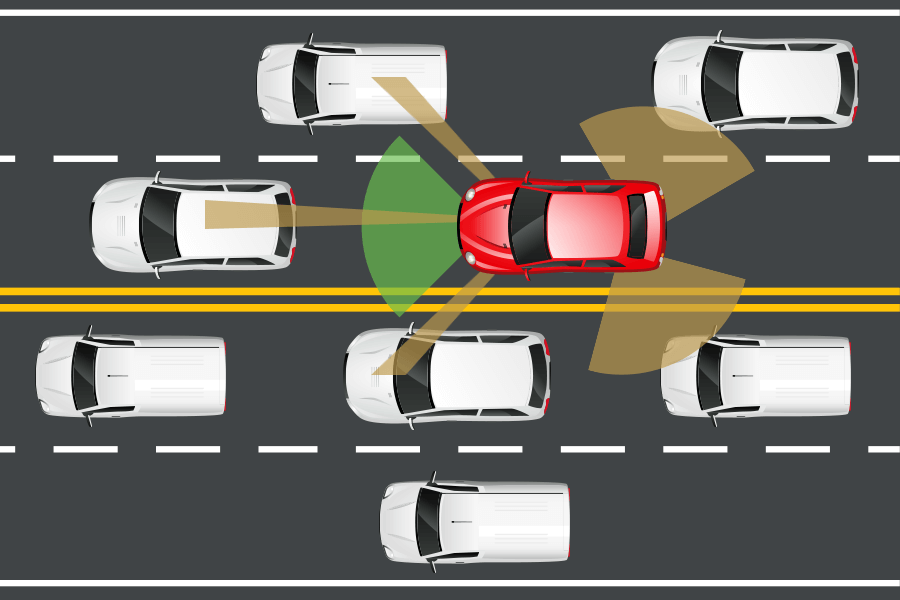

A key element of driving involves abiding by road laws in order to avoid accidents, but what happens when these rules become a little hazy? There is never a clear-cut line between right and wrong, so what happens if a driverless car is overtaking a cyclist and needs to very briefly break the speed limit so as not to collide with oncoming traffic? At a crossroads, another driver may flash their lights or raise their hand to signal for you to turn first, but is this something that would be picked up by a self-driving car?

Of course, different countries have different laws and rules of the road, which will need to be taken into account when autonomous cars are released for public use. This becomes even more complicated in the United States, where individual states often operate under different laws from one another.

Morals

And finally, the moral dilemma that has been keeping scientists awake at night – will these autonomous cars be able to make moral decisions, and how accurately do these reflect the decisions of humans? This is an issue that adds even more complications to the Trolley Problem, as the self-driving car will need to interact with other vehicles continuously. Where one human being may make a certain decision in order to avoid hurting a group of people in an accident, another human may make a different decision.

Drivers have to take many different factors into consideration when on the road, and when a fatal crash becomes a real possibility, the driver of a vehicle may have to make some important decisions.

For example, would a self-driving car be able to reach an acceptable moral conclusion if faced with a decision of whether to kill a group of four elderly people or two toddlers, for want of a better example? The car would just see a group of four humans and a group of two humans – so is the theory that harming fewer people is better than harming more always true? And can an autonomous car adapt to this? While these are decisions that we don’t like to think about, programmers will need to make them so that driverless cars are able to act in these circumstances.